Severe wildfires and the smoke pollution they produce are on the rise globally, driven by rising temperatures and drought, and they harm both the environment and public health. Canada's 2023 wildfire season was the country's worst on record, releasing more than 290 million tons of carbon into the atmosphere. California's 2020 and 2021 fire seasons also set records.

The effects of this pollution range from irritating to deadly. Smoke from the Canadian wildfires spread as far as Portugal and Spain, triggering air quality alerts in cities around the world and leaving millions of people with swollen noses, stinging eyes, and difficulty breathing. According to estimates from the National Institutes of Health, air pollution causes 6.5 million deaths worldwide each year.

“Because exposure occurs gradually, it becomes harder to measure over time, even though we know that dangerous air quality levels pose a serious threat,” stated Marisa Hughes, assistant manager of the Human and Machine Intelligence program and climate intelligence lead at the Johns Hopkins Applied Physics Laboratory (APL) in Laurel, Maryland.

“A more accurate, higher-resolution model can help protect populations by providing them with information about air quality over time so that they can better plan ahead.”

Weather forecasting with intelligence

Researchers at APL and the National Oceanic and Atmospheric Administration (NOAA) are using artificial intelligence (AI) to simulate atmospheric models and better understand where smoke pollution will travel and when. Ultimately, this family of APL projects is intended to help forecasters deliver earlier, more precise, higher-resolution predictions of how air quality threats such as wildfire plumes move and evolve.

Current weather forecasting relies on models that simulate future weather by processing vast amounts of data, including air temperature, pressure, and composition, with complex equations that follow the laws of physics, chemistry, and atmospheric transport.

Each model forecasts over a fixed interval known as a timestep. To forecast multiple timesteps further into the future, the models need additional computing power, data, and time to work through every variable.

“In our instance, the models examine the sequential movement of about 200 distinct contaminants in the atmosphere for each timestep. That’s around 40% of their work,” said Jennifer Sleeman, a senior AI researcher at APL and the principal investigator.

“The chemistry accounts for about 30% of the computation, so they also need to take into account how these chemicals are decomposing and reacting with one another. Using all of the variables, air quality forecasting requires a substantial amount of processing power.”

A single model run is not enough for forecasting. To account for potential changes in conditions, such as a cold spell or an approaching pressure system, researchers use a technique called ensemble modeling: they run anywhere from a few to hundreds of model variants and then use the mean of those variations as the forecast.

“It takes a lot of computing power to operate one model; just think about running fifty or more. Sometimes the cost and availability of computing just make this impractical,” Sleeman said.
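The averaging step in ensemble modeling can be sketched in a few lines of Python. This is only an illustration of taking the mean across model variants at each timestep, not the code used by APL or NOAA; the function name `ensemble_forecast` and the pollutant values are hypothetical.

```python
from statistics import mean, pstdev


def ensemble_forecast(members):
    """Average ensemble members timestep by timestep.

    members: list of equal-length lists, one list per model variant.
    Returns (means, spreads): the per-timestep mean across members and
    the per-timestep population standard deviation, a rough measure of
    how much the variants disagree.
    """
    if not members:
        raise ValueError("need at least one ensemble member")
    n_steps = len(members[0])
    if any(len(m) != n_steps for m in members):
        raise ValueError("all members must cover the same timesteps")

    # zip(*members) regroups the values so each tuple holds one timestep
    # across all members.
    by_step = list(zip(*members))
    means = [mean(step) for step in by_step]
    spreads = [pstdev(step) for step in by_step]
    return means, spreads


# Hypothetical pollutant concentrations from three model variants
# over four timesteps (made-up numbers, for illustration only).
runs = [
    [12.0, 15.0, 21.0, 30.0],
    [10.0, 16.0, 24.0, 27.0],
    [14.0, 14.0, 18.0, 33.0],
]
means, spreads = ensemble_forecast(runs)
print(means)
```

Every additional member here is one more list to average, but in a real system each list is a full model run, which is why ensembles of fifty or more become so expensive.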

This is where APL’s AI-assisted approach improves the speed and accuracy of the forecast. The team created deep-learning models that simulate ensembles using fewer, shorter timesteps of input.

“The amount of computation we could save with our networks is tremendous,” stated Sleeman. “We’re speeding things up because we’re asking the models to compute shorter timesteps, which is easier and faster to do, and we’re using the deep-learning emulator to simulate those ensembles and account for variations in weather data.”

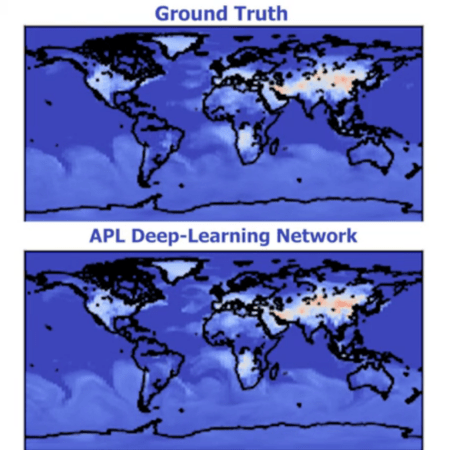

APL researchers, in collaboration with Morgan State University, NASA, and NOAA, applied the model to NASA’s GEOS Composition Forecasting (GEOS-CF) system. GEOS-CF generates a five-day global composition forecast every day at a resolution of 25 kilometers (about 15.5 miles).

After being trained on more than thirty ensemble simulations of a GEOS-CF-like system over a one-year period, the deep-learning model has consistently generated 10-day forecasts that closely match real data. The deep-learning emulator produced accurate forecasts using just seven timesteps covering 21 hours of input data, whereas standard models can require months or more of data to make estimates. By speeding up the analysis of these models, the researchers have paved the way for higher-resolution forecasting.
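The input and output figures above imply some simple arithmetic, worked through here as a rough sketch. It assumes the seven input timesteps are evenly spaced across the 21 hours, and that the forecast would use the same spacing; the article states neither, so both are assumptions, and the helper name is hypothetical.

```python
def hours_per_timestep(total_hours, n_timesteps):
    """Length of one timestep, assuming evenly spaced timesteps."""
    if n_timesteps <= 0:
        raise ValueError("n_timesteps must be positive")
    return total_hours / n_timesteps


INPUT_TIMESTEPS = 7   # from the article
INPUT_HOURS = 21      # from the article
FORECAST_DAYS = 10    # from the article

# Assumption: even spacing, so each input timestep covers 3 hours.
step_hours = hours_per_timestep(INPUT_HOURS, INPUT_TIMESTEPS)

# Assumption: the forecast uses the same spacing as the input.
forecast_hours = FORECAST_DAYS * 24
forecast_steps = forecast_hours / step_hours

print(f"{step_hours:.0f} hours per input timestep")
print(f"{forecast_hours} forecast hours, about {forecast_steps:.0f} timesteps")
```

Under those assumptions, less than a day of input supports a forecast window more than eleven times as long (240 hours versus 21).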

Sleeman recently presented the team’s findings, along with other AI-supported climate research, at meetings of the American Meteorological Society and the American Geophysical Union and at the Fall Symposium of the Association for the Advancement of Artificial Intelligence.
